The history of entrepreneurship is filled with tales of how the audacity of great entrepreneurs has triumphed over even the most prepared minds and investment theses. Savvy investors recognize this and prioritize discovering exceptional founders over getting bogged down in technological trends. Indeed, the best investment thesis is often formulated by founders who have been obsessively thinking about an idea maze.

However, the fear of missing out on the AI steamroller is causing panic and false narratives to spread. The conventional wisdom of Twitter echo chambers, the marketing white papers of venture capital firms, and the so-called "cool contrarian thinkers" lack the rigor of truth-seeking. As Warren Buffett said, "A contrarian approach is just as foolish as a follow-the-crowd strategy. What's required is thinking, not polling."

I'll discuss key questions by drawing on lessons from the drivers of the technology boom of the past 50 years and identify areas where generational companies might get built.

The Fear of Missing Out on the AI Wave

"Just as we could have rode into the sunset, along came the Internet, and it tripled the significance of the PC." Andy Grove

In 1968, Douglas Engelbart showcased the fundamental elements of modern personal computing. He introduced concepts like windows, hypertext, graphics, navigation, video conferencing, and the computer mouse. He even demonstrated a collaborative real-time editor. However, it took several decades and numerous companies, such as Apple, Intel, and Microsoft, and even some recent ones like Zoom, Replit, and Figma, to turn Engelbart's vision into reality.

ChatGPT is similar to the Engelbart demo. It will take years, and multiple phases, for AI's full potential to be realized. When a technology is important to one or more significant players in the industry, the odds are in those incumbents' favor, and new entrants are unlikely to succeed. Therefore, in the short term, AI is more of a sustaining innovation benefiting established companies. However, over the long term, as Andy Grove would probably say, "All companies will be AI companies."1

Compute, infra, and apps

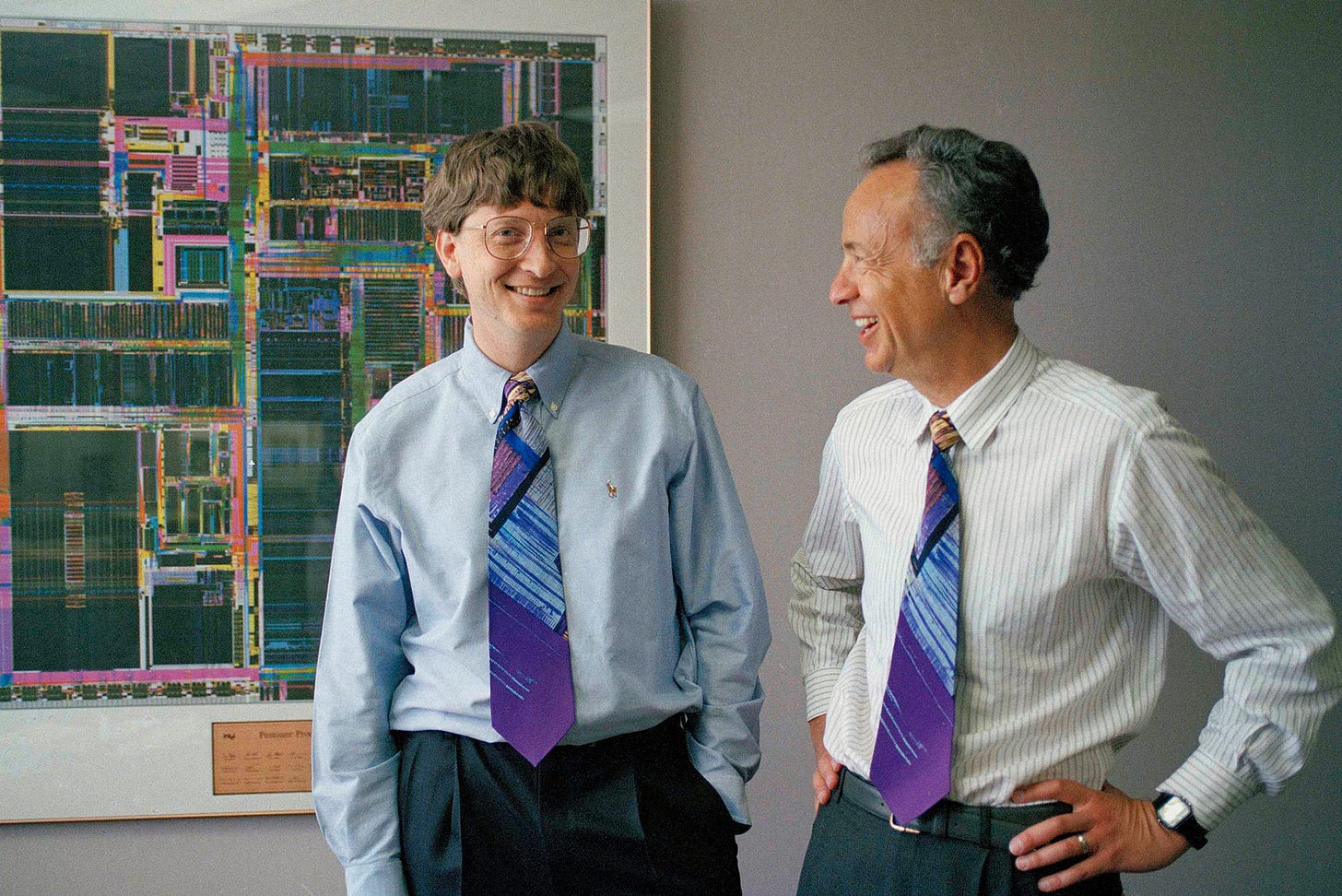

“What Andy giveth, Bill taketh away.” Andy and Bill’s Law

In the 90s, there was a humorous one-liner at computer conferences: “What Andy giveth, Bill taketh away.”2 I would argue the same will be true of GPUs for the foreseeable future. Indeed, top AI researchers are entertaining the idea of $10B models.3 For decades, Intel retained over 80% market share in PCs, data centers, and high-performance computing.4 Only the platform shift to mobile and increased demand for parallel processing tilted the power toward Apple, Qualcomm, Nvidia, and AMD.

CUDA (Compute Unified Device Architecture) is Nvidia's closed-source, proprietary software programming layer. It has a robust ecosystem of developers and a wide variety of specific applications for use cases ranging from self-driving cars to biology.5 CUDA is a classic example of hardware-software integration, as it creates a moat around Nvidia's GPUs.

In addition to CUDA, Nvidia is also innovating on all three fundamental components of computing: CPUs, GPUs, and DPUs. The performance-price ratio of Nvidia's GPUs is doubling every 2-2.5 years.6 This is similar to Moore's Law, and it will allow AI models, infrastructure, and applications to operate in a constant state of deflation, creating an opportunity for patient investors and entrepreneurs with conviction.
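To make the deflation claim concrete, here is a minimal sketch of the arithmetic, assuming the doubling trend holds and behaves like a smooth exponential (an idealization, not a forecast). The function names are hypothetical and chosen only for illustration. A 2-year doubling period implies the cost of a fixed amount of compute falls by roughly 29% per year and 32x over a decade; a 2.5-year period implies roughly 24% per year and 16x.

```python
def cost_multiplier(years: float, doubling_period_years: float) -> float:
    """Fraction of today's cost needed to buy the same compute after `years`,
    assuming price-performance doubles every `doubling_period_years`."""
    return 0.5 ** (years / doubling_period_years)


def annual_cost_decline(doubling_period_years: float) -> float:
    """Implied yearly drop in the cost of a fixed amount of compute."""
    return 1.0 - cost_multiplier(1.0, doubling_period_years)


if __name__ == "__main__":
    # The two ends of the 2-2.5 year range quoted in the text.
    for period in (2.0, 2.5):
        decline = annual_cost_decline(period)
        decade = 1.0 / cost_multiplier(10.0, period)
        print(f"doubling every {period} years: "
              f"{decline:.1%} cheaper per year, {decade:.0f}x cheaper in 10 years")
```

The half-year gap between the two ends of the range looks small, but it compounds into a 2x difference in cost over ten years, which is why patience matters in this argument.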

Jensen Huang, the co-founder and CEO of Nvidia, started the company as a graphics chip designer. Over the years, he has transformed the company's focus several times, branching out into the car industry, crypto, and now AI. Behind the scenes, he is planting the seeds of future markets in robotics and biology that are likely to be even larger than the company's current presence. Surprisingly, most VCs are not paying attention to these emerging markets.

Despite Nvidia's leadership in GPUs, there are still opportunities for investment in the compute layer. As Jobs famously said, “It’s very subtle to talk about strength and weakness because they’re almost always the same thing.” First, open-source parallel programming layers like OpenAI Triton7 are portable, which means they can be used on different types of hardware.8 It is highly likely that other chip manufacturers such as Intel and AMD, as well as cloud providers like Google, Amazon, and Microsoft, will support and subsidize a new open-source platform to challenge Nvidia's dominance. Second, GPUs are great for training but overshoot the needs of inference, which creates demand for inference-efficient hardware. AMD is already exploiting Nvidia’s weakness in inference. Finally, running AI models on application-specific integrated circuits (ASICs) has many benefits, including zero training costs, optimized performance, and more privacy. In a recent interview, Johny Srouji, Apple’s SVP of Hardware Technologies, hinted that Apple aims to run AI on its devices, as evidenced by the trajectory of innovation in its neural chips.

“The Myth of the Infrastructure Phase”9, put forth by folks at USV, argues that apps and infrastructure evolve in responsive cycles. Planes (the app) were invented before airports (the infrastructure), cars before roads, lightbulbs before grids, and video streaming before the cloud. At the same time, the language model enabling applications like Copilot for sales is an application in itself, enabled by GPUs.

Thanks to its simplicity and easy integration, ChatGPT enabled developers to experiment with a range of app concepts, such as copilots and agents. This resulted in an influx of venture capitalists investing in the initial wave of AI infrastructure, which included vector databases, frameworks for expanding language models, and services that simplify model deployment. However, modularization starts only after the successful adoption of integrated solutions, which is yet to happen. There are still many unanswered questions regarding the optimal technology stack. Current solutions, such as RAG and language frameworks, are overly complex and may not be necessary.10

In summary, the interplay between infrastructure and application layers is an evolving, multifaceted process. It is difficult to predict with certainty how these cycles will unfold, but it is clear that they will be nonlinear and extend over a much longer timescale than the current excitement suggests.

Hitting the Oil Wall

“Everybody wins. Music companies win. The artists win. Apple wins. And the user wins because he gets a better service and doesn’t have to be a thief.” Steve Jobs

In a world where OpenAI, Anthropic, Inflection, and Google all scrape almost the same data from the internet and use the same architecture, what are the true sources of differentiation and competitive advantage?

The contentious battle over data scraping is a Napster moment. A group of leading publishers and Barry Diller are preparing legal action against AI companies whose models were trained on their data.11 Reddit and Twitter, whose data is publicly accessible, have taken steps to block scraping to safeguard their intellectual property.12 This trend will likely accelerate. It is critical for technology companies to find a way to share credit for the data they use. After all, the brilliance of the iPod was its ability to partner with music owners.

From a technology standpoint, further progress in LLMs hinges on answering two critical questions:

Is it possible to improve LLMs’ performance by utilizing synthetic data?

Are there new architectures on the horizon that can reduce dependency on large datasets (single-shot training) and enable intricate reasoning and planning?

Financial and consulting companies such as Thomson Reuters, Bloomberg, and McKinsey, pharmaceutical giants like GSK, and electronic design automation (EDA) vendors such as Cadence and Synopsys possess enormous amounts of valuable data. It is unclear how these companies will apply AI to their distinctive datasets, as not all of them have the technical expertise to do so. This presents an opportunity for infrastructure companies to enable enterprise clients to train their own AI models.

Copyright concerns have a cascading effect on enterprise clients' ability to get value from their data. It's important to note that most enterprise data is still not ready for training, and 50-80% of partnerships fail in the first few years. Therefore, in the short term, the biggest winners will be cloud providers.

All things considered, enterprise AI will take longer than the initial expectations after the release of ChatGPT, and without new breakthroughs in synthetic data and model architectures, LLMs are reaching points of diminishing returns.

Me-Too Products

“It's not that we need new ideas, but we need to stop having old ideas.” Edwin Land

When Jobs visited Xerox PARC, he got to observe three breakthrough technologies, each defining more than a decade in computer technology: graphical computer interfaces, object-oriented programming, and networked computer systems. Later on, he said, "I was so blinded by the graphical user interface that I didn't even really see the other two."13 Fast forward to 2023, after getting a demo of ChatGPT, Gates wrote, "I knew I had just seen the most important advance in technology since the graphical user interface."14

Disruptive innovations are (a) a cheap alternative targeting the low end of the market and/or (b) a simple solution targeting customers who lack skills by lowering the learning curve of existing solutions.15 Midjourney is an example of a disruptive AI product with a simple, affordable, and foolproof solution targeting non-consumption.

Large language models are enormously powerful, creating opportunities for bottom-up AI products to leapfrog the competition. However, the current wave of applications consists of sustaining innovations: they are either extensions of incumbent platforms or easily integrated with existing workflows, allowing big players to kill startups with each release.

From a user experience standpoint, AI is not natively integrated with the interface. Instead of starting ideas on a blank canvas, designers are constrained by the space available within existing products. This limits value creation across the user journey.

There is also a lack of business model innovation to counter and exploit the incumbents’ strengths. Indeed, most startups are focused on convincing existing customers to switch rather than identifying and targeting new segments.16 Compare this to the first winners of the internet era, which successfully used the internet's advantages to unlock distribution. PayPal’s rapid iterations in the market, from transferring money through email to integrating the checkout button for eBay power sellers, were key to its success. It remains to be seen how AI will be applied in new markets or used as a catalyst to unlock distribution.

Finally, there is a lack of ambition to pursue AI in multidisciplinary domains like healthcare and synthetic biology. After all, curing diseases, developing advanced materials, and enabling the energy transition are opportunities to create and monopolize gigantic new markets.

Platform Wannabes

“I believe it was the Sun marketing people who rushed the thing out before it should have gotten out.” Alan Kay

A product is typically proprietary and controlled by one company, while an industry platform is a foundational technology or service that is essential for a broader, interdependent ecosystem of businesses.17 The history of technology shows that platform wannabes need to play both technology and business games.18

A big part of Google's success is attributed to its unique business model. Indeed, Google iterated on three different business models before its employee number 9, Salar Kamangar, came up with the keyword auction model. Google found a way to make money on the Internet and changed the advertising industry by transforming the dynamics between advertisers and Internet users. Currently, there are very few, if any, business model innovations to monetize large language models.

Second, when a company dominates one platform market, it can use that power to enter other platform markets. Once a comparable product is free, competitors have little choice but to make their product free or find other ways to make money. When Microsoft released Internet Explorer, Netscape underestimated the power of this dynamic.19 That's why it's too early to count out Google, cloud providers, and social media platforms.

Third, platform leaders enjoy power law distribution and competition among their complements.20 However, not every market has a platform leader, and not all value necessarily accrues to platform leaders.

In markets where there is sufficient space for differentiation, various platforms can coexist. The video game console market is shared by Sony, Microsoft, and Nintendo. Similarly, it is quite possible that we will see a future with multiple specialized models.

Linux is a case study that illustrates platform leaders can’t necessarily capture the most value. Linux worked exceptionally well, thanks to the Apache Web server.21 However, when one layer of the “system of use” gets open-sourced, value accrues in other parts of the value chain. In the case of Linux, cloud providers captured most of it.

OpenAI's recent releases reflect this landscape: the company is differentiating against other LLM providers with cheaper and better developer tools, and integrating forward with GPTs to build a value network. To avoid commoditization by cloud providers, AI platform wannabes should innovate on both technology and business models.

Illusion of Moats

“It’s incredibly arrogant for a company to believe that it can deliver the same sort of product that its rivals do and actually do better for very long.” Warren Buffett

At the 1995 annual shareholders meeting, Buffett coined the word “moat” for the most important factor he looks for in a business. He defined his favorite business as “A business with a wide and long-lasting moat around it … protecting a terrific economic castle with an honest lord in charge of the castle.”22

The desire to match Buffett's returns is so strong that even VCs are looking for moats in early-stage startups. In reality, companies with the largest moats, like Costco, Apple, and Visa, didn't seem to have any moats early on. Instead, they had accumulating advantages that made operating the business easier as they grew, allowing them to achieve sustainable value creation with margins higher than their competitors.

The current belief in Silicon Valley is that AI has three moats: data, model (aka talent), and computing power. Interestingly, incumbents and most well-funded AI startups have at least two of the three perceived moats, as the best AI talent has raised billions to buy GPUs.

Moats are not static; they can get narrower or wider.23 Indeed, the capabilities that allowed OpenAI and Anthropic to build large language models, such as data scraping and GPUs, are becoming widely available over time. At the same time, open-source models from Meta and Mistral diminish the value of alternatives without proprietary data. That’s why Eric Vishria recently referred to foundational models as one of the fastest-depreciating assets in history.

In summary, if the so-called moats can’t translate into economic advantages like low-cost training and inference, high switching costs, network effects, brand recognition, and efficient scale, large language models will have a hard time achieving sustainable value creation.

Back to the Future

“We tend to overestimate the effect of a technology in the short run and underestimate the effect in the long run.” Roy Amara

In 1978, the US military began launching what would become a constellation of 24 satellites to create an accurate navigation system. The main objective was to enable soldiers to deliver ordnance with accuracy. During the 80s, the program faced several financial constraints that nearly led to its cancellation. However, the first operational use of GPS in the Gulf War in 1991 proved its success and persuaded the military of its value. Consequently, the program was fully funded. It took almost thirty years for GPS to be integrated into smartphones, where it became an essential part of modern life. AI was overhyped in the ’60s and ’80s, and the rush to .ai names is an early sign of a bubble. However, the long-term potential of AI is underestimated.

From entrepreneurs to policymakers, everyone wants to know how AI will change our lives. Taking a page from Munger's book, maybe the right question is the opposite: "What's not going to change in the next ten years?"

Programs will still need to be developed and deployed quickly on reliable, cost-competitive infrastructure. Businesses will still need tools to increase productivity and enable better decision-making. Consumers will always prefer affordable services, the ability to do more, and easy-to-use products.

Language models will simplify interfaces and flatten the learning curve of existing tools, disrupting non-consumption and creating new markets. Intelligence functions (abstraction, logic, problem-solving, reasoning, planning, critical thinking, emotional thinking, and creativity) will create new software categories, decreasing costs in the service sector, particularly healthcare, legal, education, professional, and creative services. There will also be a host of apps in art, education, finance, entertainment, and commerce.

The transition from logic machines to probabilistic machines and the emergence of new bottlenecks like memory will usher in a new architecture for computing and could revive analog processors, particularly for edge devices.

The tech stack to build robots (interface, perception, and navigation) is finally available, and digital twins will be the foundation for training robots and digitizing factories.

Finally, transformer models, simulators, and AI solvers for physics, biology, and chemistry will revolutionize research and discovery and unlock the world of atoms by unleashing synthetic biology.

“I have been quoted saying that, in the future, all companies will be Internet companies. I still believe that. More than ever, really.” Andy Grove

https://developer.nvidia.com/about-cuda

https://epochai.org/blog/trends-in-gpu-price-performance

https://openai.com/research/triton

https://www.usv.com/writing/2018/10/the-myth-of-the-infrastructure-phase/

https://thehill.com/policy/technology/4100533-diller-confirms-plans-for-legal-action-over-ai-publishing/

https://www.nytimes.com/2023/04/18/technology/reddit-ai-openai-google.html

https://www.bhooshan.com/2017/12/07/quotes-steve-jobs-lost-interview/#:~:text=On%20Discovering%20The%20GUI%20Experience%20at%20Xerox%20PARC&text=I%20went%20over%20there.,really%20see%20the%20other%20two.

https://www.gatesnotes.com/The-Age-of-AI-Has-Begun

https://hbr.org/2015/12/what-is-disruptive-innovation

https://hbr.org/2005/10/every-products-a-platform

https://sloanreview.mit.edu/article/how-companies-become-platform-leaders/

https://hbswk.hbs.edu/archive/platform-leadership-how-intel-microsoft-and-cisco-drive-industry-innovation-do-you-have-platform-leadership

https://sloanreview.mit.edu/article/how-companies-become-platform-leaders/

https://sloanreview.mit.edu/article/how-companies-become-platform-leaders/

https://buffett.cnbc.com/1995-berkshire-hathaway-annual-meeting/

https://www.safalniveshak.com/wp-content/uploads/2012/07/Measuring-The-Moat-CSFB.pdf

https://ai.meta.com/blog/large-language-model-llama-meta-ai/

https://en.wikipedia.org/wiki/Global_Positioning_System

https://www.technologyreview.com/2017/10/06/241837/the-seven-deadly-sins-of-ai-predictions/